Exercise 2.67 -- 2.72

Exercise 2.67

Define an encoding tree and a sample message:

(define sample-tree

(make-code-tree (make-leaf `A 4)

(make-code-tree

(make-leaf `B 2)

(make-code-tree (make-leaf `D 1)

(make-leaf `C 1)))))

(define sample-message `(0 1 1 0 0 1 0 1 0 1 1 1 0))Use the decode procedure to decode the message, and give the result.

Solution

(decode sample-message sample-tree)

; (a d a b b c a)Exercise 2.68

The encode procedure takes as arguments a message and a tree and

produces the list of bits thta gives the encoded message.

(define (encode message tree)

(if (null? message)

'()

(append (encode-symbol (car message) tree)

(encode (cdr message) tree))))Encode-symbol is a procedure, which you must write, that returns the

list of bits that encodes a given symbol according to a given tree.

You should design encode-symbol so that it signals an error is the

symbol is not in the tree at all. Test your procedure by encoding the

result obtained in Exercise 2.67 with the sample tree and

seeing whether it is the same as the original sample message.

Solution

(define (encode-symbol symbol tree)

(cond ((leaf? tree) '())

((element-of-set? symbol (symbols (left-branch tree)))

(cons 0 (encode-symbol symbol (left-branch tree))))

((element-of-set? symbol (symbols (right-branch tree)))

(cons 1 (encode-symbol symbol (right-branch tree))))

(else (error "symbol not in tree - ENCODE-SYMBOL" ))))

(encode (decode sample-message sample-tree) sample-tree)

; (0 1 1 0 0 1 0 1 0 1 1 1 0)Exercise 2.69

The following procedure takes as its argument a list of symbol-frequency pairs (where no symbol appears in more than one pair) and generates a Huffman encoding tree according to the Huffman encoding algorithm.

(define (generate-huffman-tree pairs)

(successive-merge (make-leaf-set pairs)))Make-leaf-set is the procedure given above that transforms the list

of pairs into an ordered set of leaves. Successive-merge is the

procedure you must write, using make-code-tree to successively merge

the smallest-weight elements of the set until there is only one

element left, which is the desired Huffman tree. (This procedure is

slightly tricky, but not really complicated. If you find yourself

designing a complex procedure, then you are almost certainly doing

something wrong. You can take significant advantage of the fact that

we are using an ordered set representation.)

Solution

(define (successive-merge leaf-set)

(if (null? (cdr leaf-set))

(car leaf-set)

(successive-merge

(adjoin-set

(make-code-tree (cadr leaf-set)

(car leaf-set))

(cddr leaf-set)))))Exercise 2.70

The following eight-symbol alphabet with associated relative frequencies was designed to efficiently encode the lyrics of 1950s rock songs. (Note that the “symbols” of an “alphabet” need not be individual letters.)

A 2 NA 16

BOOM 1 SHA 3

GET 2 YIP 9

JOB 2 WAH 1

Use generate-huffman-tree to generate a corresponding Huffman tree,

and use encode to encode the following message:

Get a job

Sha na na na na na na na na

Get a job

Sha na na na na na na na na

Wah yip yip yip yip yip yip yip yip yip

Sha boom

How man bits are required for the encoding? What is the smallest number of bits that would be needed to encode this song if we used a fixed-length code for the eight-symbol alphabet?

Solution

(define pairs

'((A 2) (NA 16)

(BOOM 1) (SHA 3)

(GET 2) (YIP 9)

(JOB 2) (WAH 1)))

(define message

'(Get a job

Sha na na na na na na na na

Get a job

Sha na na na na na na na na

Wah yip yip yip yip yip yip yip yip yip

Sha boom))

(define tree

(generate-huffman-tree pairs))

(encode message tree)

; (0 0 0 0 0 0 0 1 1 0 0 0 0 1 0 0 0 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 1

; 1 0 0 0 0 1 0 0 0 1 1 1 1 1 1 1 1 1 0 0 1 0 1 0 1 0 1 0 1 0 1 0 1 0

; 1 0 1 0 1 0 1 0 0 0 1 0 0 1 0 0)

(length (encode message tree))

; 84If we used fixed-length encoding, that is, by using 3 bits to encode each symbol, the number of bit need to encod the message:

(* 3 (length message))

; 108Exercise 2.71

Suppose we have a Huffman tree for an alphabet of $n$ symbols, and that the relative frequencies of the symbols are $1,2,4,\ldots,2^{n-1}$. Sketch the tree for $n=5$; for $n=10$. In such a tree (for general $n$) how many bits are required to encode the most frequent symbol? The least frequent symbol?

Solution

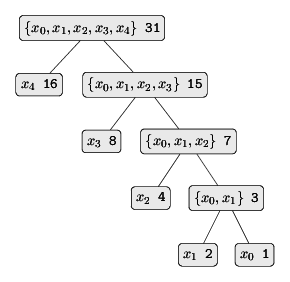

The Huffman encoding tree for $n=5$:

Tikz code here

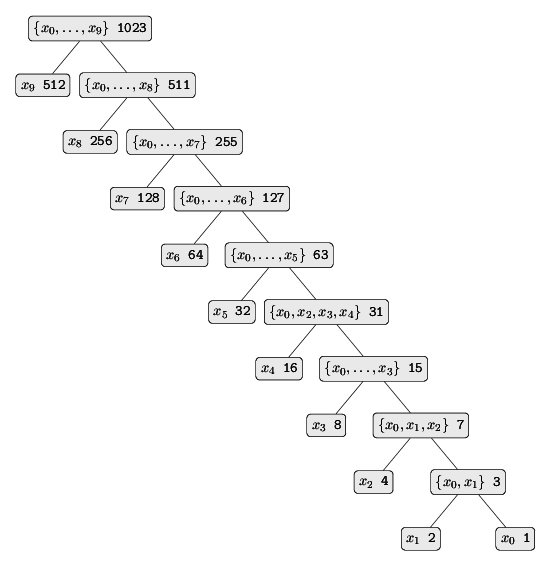

And thre Huffman encoding tree for $n=10$:

Tikz code here

For an alphabet with $n$ symbols, the most frequency symbol can be encoded with 1 bit and the least frequent symbol with $n-1$ bits.

Exercise 2.72

Consider the encoding procedure that you designed in Exercise 2.68. What is the order of growth in the number of steps needed to encode a symbol? Be sure to include the number of steps needed to search the symbol list at each node encountered. To anser this question in general is difficult. Consider the special case where the relative frequencies of the $n$ symbols are as described in Exercise 2.71, and give the order of growth (as a function of $n$) of the number of steps needed to encode the most frequent and least frequent symbols in the alphabet.

Solution

We will check the order of growth for encode-symbol by timing its

performance as we increase the alphabet size.

The procedure make-my-tree-1, which takes an integer argument n,

creates an encoding tree for an alphabet with n symbols, where all

symbols have a relative frequency of 1. Random-symbols-1, taking

an integer argument n, creates a list of 1000 symbols randomly

drawing from an alphabet of n symbols, where all symbols have the

same frequency.

(define (make-my-tree-1 n)

; all symbols have same frequency

(define (make-my-list n)

(if (< n 0)

'()

(cons (list n 1) (make-my-list (- n 1)))))

(generate-huffman-tree (make-my-list n)))

(define (random-symbols-1 n)

; a list of 1000 random numbers, between 0 and n (inclusive)

(map (lambda (i) (random n)) (iota 1000)))To time the encoding process:

(define (time-encoding symbols tree start-time)

(map (lambda (s) (encode-symbol s tree)) symbols)

(display (- (runtime) start-time)))We can use this to time the encoding of 1000 random symbols from a 1000 symbol alphabet:

(define t (make-my-tree-1 1000))

(define s (random-symbols-1 1000))

(time-encoding s t (runtime))

; .8300000000000001We repeat this for alphabets of size 2000, 3000, 4000, and 5000. The table below clear indicates linear ($O(n)$) growth in time:

| Alphabet size | Time (s) |

|---|---|

| 1000 | 0.83 |

| 2000 | 1.59 |

| 3000 | 2.51 |

| 4000 | 3.20 |

| 5000 | 4.15 |

For the alphabet with frequencies described in Exercise 2.71

(here random-symbols-2 create a random list of length 10000 – we

have used a longer list to get more reliable timings):

(define (make-my-tree-2 n)

; symbol/frequency as described in Ex2.71

(define (make-my-list n)

(if (< n 0)

'()

(cons (list n (expt 2 n)) (make-my-list (- n 1)))))

(generate-huffman-tree (make-my-list n)))

(define (random-symbols-2 n)

(let ((d (- (expt 2 (+ n 1)) 1)))

(define (f x m)

(if (<= x (/ (expt 2 m) d))

m

(f (- x (/ (expt 2 m) d)) (- m 1))))

(map (lambda (i) (f (random 1.0) n))

(iota 10000))))

(define t (make-my-tree-2 1000))

(define s (random-symbols-2 1000))

(time-encoding s t (runtime))

; .10000000000000142The table below suggests that encoding time here is constant ($O(1)$).

| Alphabet size | Time (s) |

|---|---|

| 1000 | 0.10 |

| 2000 | 0.12 |

| 3000 | 0.10 |

| 4000 | 0.10 |

| 5000 | 0.09 |